- Home

- Resource Center

- Articles & Videos

- The Challenges and Triumphs of Annotating Indian Languages

16 September 2024

The Challenges and Triumphs of Annotating Indian Languages

Please note: The organization, grammar, details and presentation of this article were improved by the use of AI.

For years, humans have been conveying their thoughts, emotions and expressions with each other through natural language. There is a growing buzz around AI’s potential to transform how we communicate. With the advent of tools like Chat-GPT, people are experiencing these advancements first-hand. AI-driven Natural Language Processing (NLP) is at the forefront of this transformation - promising to bridge communication gaps, automate processes and process large amounts of data.

However, these advancements often cater primarily to widely spoken languages like English, leaving many other languages underrepresented. In a country like India with 1.4Bn people, 22+ official languages and numerous dialects, this gap becomes even more evident.

Developing accurate Large Language Models (LLM) for Indian languages has become crucial for advancement. LLMs are essentially machine learning models that are trained to comprehend human language text but they need a large amount of data. These models help in creating effective NLP applications, facilitate multilingual communication and preserve the linguistic diversity.

A large part of India is multilingual. Each state, and sometimes each district in India has its own language or dialect - often with unique scripts, phonetics, and grammatical structures. For instance, Hindi, spoken by over 40% of the population, has a completely different structure and vocabulary compared to Tamil, which is Dravidian in origin and spoken in the southern state of Tamil Nadu.

It is comparatively easier to train computer models for the English language through standard ASCII Codes as compared to other natural languages. However, this is not the case when it comes to Indian languages. Training models to understand and annotate these languages is not only a technological feat but also a cultural endeavor. Maintaining accurate grammar, syntax, cultural relevance and local expressions are challenging whilst annotating Indian languages. Here’s why:

Challenges

1. Tokenization: This is a crucial step for preprocessing text. It involves breaking textual data into words, terms, sentences and symbols etc and assigning “tokens”.

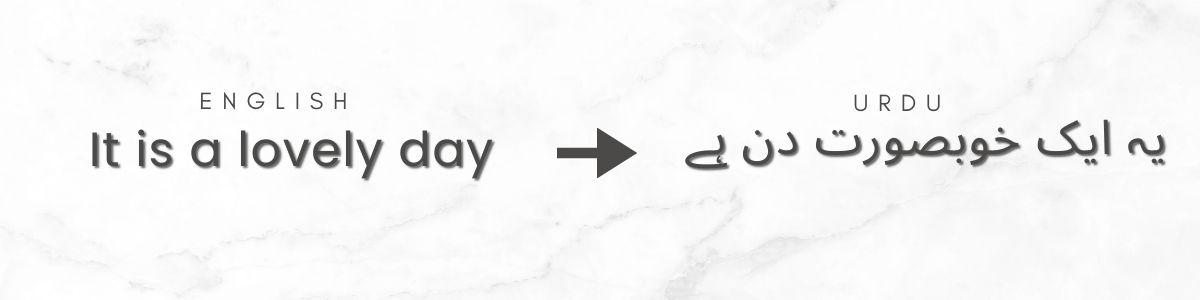

In English, tokenization is relatively straightforward because words are clearly separated by spaces and punctuation. In contrast, languages like Urdu face issues such as space insertion and omission errors. For example, Urdu phrases can be written with inconsistent spacing, like "کتابپڑھ رہا ہے" instead of the correct "کتاب پڑھ رہا ہے," complicating accurate tokenization. There are many open-source tools such as SpaCy, NLTK, Stanford NLP etc available for tokenization of the English language. However, at present, the same doesn't exist for Indian languages.

2. Parts-of-Speech (POS) tagging: This is another important step to building any NLP tool. NLPs are machine learning technologies that have the ability to interpret, manipulate and comprehend human languages. This involves labeling words in a text with their corresponding parts of speech such as nouns, verbs, adjectives etc. POS tagging in Indian languages presents a unique set of challenges due to their linguistic diversity and structural complexity. One major hurdle is the complexity of these languages, where words change extensively based on tense, aspect, mood, gender, number and case. For instance, the Hindi verb "करना" (to do) has multiple forms like "किया" (did) and "करेंगे" (will do), each of which requires accurate tagging.

Many languages such as Hindi, Kannada, Marathi, Malayalam, Tamil and Telugu follow relatively free word order, which further adds to the complexity of annotating. The same sentence can have multiple valid structures such as subject-object-verb (SOV) or object-subject-verb (OSV) as shown in the image below. The ability to rearrange the subject, object, and verb while maintaining grammatical accuracy and meaning adds significant complexity to natural language processing tasks.

Unlike English, which follows a standard word order, NLP models must understand the context of Indian languages to correctly identify the roles of each word. POS tagging mainly uses machine learning algorithms which are trained on large annotated bank of text. For Indian languages a major bottleneck is the non-availability of language resources.

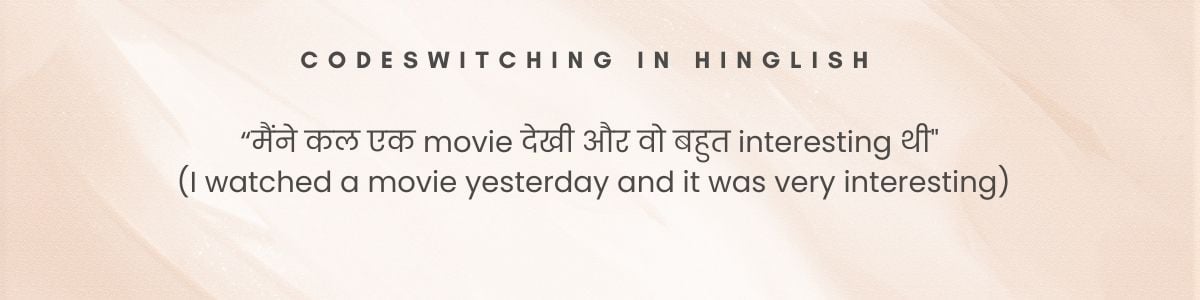

3. Code Switching and Multilingualism: Code-switching involves alternating between two or more languages while in conversation. Almost every person in India, at the very least, is bilingual. It’s a very common practice to mix two languages whilst conversing. The most common mix is between English and regional languages, such as Hinglish (Hindi-English). Annotating such text is challenging because it requires the tagger to match and label the language of each segment and apply accurate linguistic rules.

In this sentence, "movie" and "interesting" are English words embedded in a Hindi sentence. Annotating this requires the system to recognize "movie" as a noun and "interesting" as an adjective in the context of Hindi grammar. Certain documents may even contain a combination of 3 different languages, each with different linguistic rules. Annotating such data requires the system to be aware of all linguistic guidelines which is difficult for resource-poor languages.

4. Script variations: Indian languages have a diverse range of scripts used across different linguistic families. Hindi uses Devnagri script, while Punjabi uses multiple scripts such as Gurmukhi and Shahmukhi - each requiring distinct handling for accuracy. Furthermore, the absence of standardized spellings in many languages complicates the tasks. The unique characteristics of each script add complexity; Telugu, for example, incorporates complex conjunct consonants like "క్ష" (kṣa), which must be correctly identified as single units during annotation.

While these were some of the challenges, there is also some progress made in the annotation of regional Indian languages.

Zero shot learning

Zero-shot learning has revolutionized the annotation of regional Indian languages by enabling models to recognize and annotate words that they have not seen during training. This approach leverages cross-lingual transfer learning and multilingual pre-trained models like mBERT and XLM-R to generalize knowledge from high-resource languages like Hindi to low-resource ones such as Bhojpuri and Manipuri. It has proven effective in tasks like Named Entity Recognition (NER) and sentiment analysis, where models trained on languages like Hindi or English can accurately process Marathi or Bengali text.

2. Advancements in NLP

Anusaaraka, a tool developed for Indian languages, incorporates deep linguistic analysis to accurately tokenize and tag words based on their morphological context, enhancing the reliability of NLP applications. LLMs like mBert are pre-trained on multilingual datasets including Indian languages. To further fine tune it for Hindi, a corpus of Hindi text is used. The model adjusts its weights based on this new data, improving its performance on tasks like tokenization, POS tagging, and named entity recognition for Hindi.

3. Government Initiatives

The Government of India has launched several initiatives to promote annotation and digitalization of Indian languages. The National Digital Library of India (NDLI), BharatNet are super helpful for extensive digital resources and building the foundation for AI training. Furthermore, the Universal Networking Language (UNL) initiative aims to create a comprehensive linguistic database, facilitating cross-language information exchange and standardized annotation practices across languages.

To conclude, while there are still significant challenges to overcome for Indian languages, there has also been notable progress. In-depth research and advancements in technology have shown promising results in the development of NLPs for Indian languages in the years to come.

Shrushti Chhapia

Shrushti Chhapia co-founded eLanguageWorld in 2013 with a vision to showcase the rich diversity of Indian languages to the world. Her technical expertise, combined with her passion for languages, has been instrumental in the success of eLanguageWorld. eLanguageWorld is a women-first company. Her leadership is a testament to the power of determination and a commitment to empower women.