- Home

- Resource Center

- Articles & Videos

- Situational Awareness in Machine Interpreting

22 January 2024

| by Globalization and Localization Association

Situational Awareness in Machine Interpreting

Machine Interpreting, a subset of spoken language translation, is undergoing rapid advancements. The recent strides in this domain are particularly evident in the development of robust end-to-end systems. These systems utilize a singular language model to directly translate spoken content from one language to another. As impressive as this technology is, it currently finds its best application only in offline speech translation tasks. When it comes to real-time simultaneous translation, which is my primary area of interest, cascading systems with their multifaceted components and possible configurations remain the gold standard. Despite their inherent complexity and intrinsic limitations, cascading systems present a distinct advantage: they are adept at incorporating the latest innovations in Generative AI. This compatibility paves the way for immediate enhancements in speech translation quality.

Getting AI to Read Between the Lines

I recently gave an interview to El País where I argued that one of the biggest challenges of real-world speech translation can be solved, at least to a certain extend, with Large Language Models (LLM) like ChatGPT or LLama2. The challenge I’m addressing is the capacity to translate in a manner that’s informed by the communicative context, necessitating a form of “understanding” of the contingent situation. I employ the term “understanding” in quotes, given its contentious nature and the absence of a universally accepted definition. For our purposes, let’s define understanding as the capability to amass enough knowledge to enable the system to respond coherently and aligned to the communicative context. This encompasses skills like basic co-reference resolution (identifying who is speaking to whom, their gender, status, role), adjusting terminology, register, and style (speaking as an expert versus a layperson), and discerning implied meanings beyond literal statements (inferring subtext, intent, etc), to mention a few. Traditional Neural Machine Translation (NMT) falls short in these areas. Conversely, and notwithstanding their intrinsic limitations, the reasoning and in-context learning prowess of LLMs have showcased remarkable proficiency in these domains. Thus, they could be pivotal in aiding speech translation to transcend its primary constraint: the lack of inherent ties to the communicative context. Needless to say, this paves the way for a more enriched translation experience.

Enhancing Speech Translation Through LLMs

If you’ve extensively interacted with an advanced large language model (comparable to GPT-3.5-turbo, for instance), its potential becomes clear. Dissect a communicative act into its core components. As the act progresses and a participant introduces new information, evaluate the likelihood of specific actions being taken by either party. Probe the model about the speakers’ intentions, predict the potential trajectory of the conversation, and, with sufficient contextual information at hand, you’ll observe the intriguing insights an LLM can glean from such data. This is the basis of what I call situational awareness (different to the “higher” level of awareness as described here).

This capability warrants exploration. At present, my research is geared towards leveraging Large Language Models to:

- Disambiguate meaning through context.

- Comprehend and continuously increase the knowledge about the communicative event.

- Assess the system’s confidence for the gained knowledge.

- Trigger translation decisions based on this understanding of the communication.

This process presents fascinating challenges across various dimensions. From a computer science perspective, the question arises: how deeply can an LLM comprehend a communicative event, and what measures can we take to aid its understanding? On the translation front, once we’ve amassed sufficient contextual data, how can we harness it to enhance machine interpreting strategically?

Integrating Frame Semantics for Contextual Awareness

The methodology I’m developing to address the initial aspect of this challenge draws inspiration from Frame Semantics, a theory formulated by Charles J. Fillmore in the 1970s. This theory relates linguistic semantics with encyclopedic knowledge. Within this framework, employing a word in a novel context means comparing it with past experiences to see if they match in meaning. Fillmore elucidates this using the notions of scenes and frames. The term “frame” denotes a collection of linguistic options or constructs, which in turn evokes a mental representation or “scene”. Fillmore characterizes a scene as any recognizable experience, interaction, belief, or imagination, whether visual or not. Scenes and frames perpetually stimulate one another in patterns like frame-to-scene, scene-to-frame, scene-to-scene, and frame-to-frame. Specifically, the activation process pertains to instances where a distinct linguistic structure, such as a clause, triggers associations. These associations then prompt other linguistic structures and incite additional associations. This interplay ensures every linguistic element in a text is influenced by another, facilitating the extraction or even construction of meaning from linguistic statements. Essentially, it fosters understanding—or interpretation—of the situation. My ambition is to synthetically instigate and regulate this interplay between scenes and frames using an LLM, aiming to infuse contextual awareness into the translation procedure.

Challenges and Opportunities in Situational Awareness

Speech translation offers an ideal setting to explore this approach. It bears similarities to conversation design—arguably its most direct application—yet comes with the advantage of more straightforward evaluation criteria and metrics. In my working hypothesis, for an LLM to significantly enhance the translation process, it needs to transform into a communicative agent adept at discerning the nuances, logic, and dynamics of real-world scenarios, and then channel this understanding into the translation. This is no small feat, given the current limitations of LLMs, on the one hand, and the complexity of real-life communication, especially multilingual, on the other. LLMs’ understanding is grounded solely in the insights text can offer. They lack for example the ability to process visual indicators and, vitally for speech translation, they are unable to decode nuances from acoustic cues like prosody. Undoubtedly, this expanding list of challenges, which are nowadays limitations, is crucial for effective oral communication.

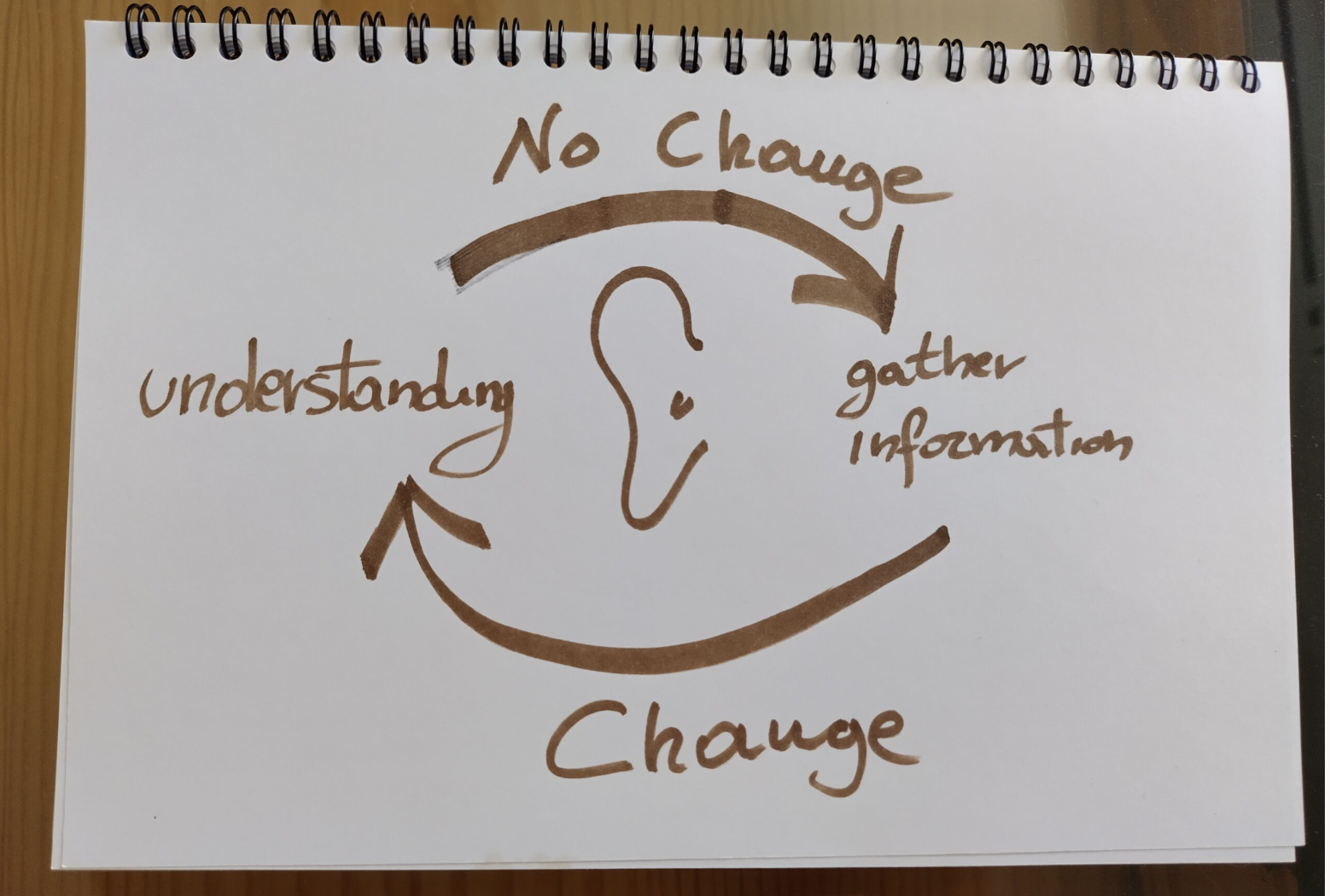

Deciphering Linguistic Input

Yet, LLMs ability in deciphering pure linguistic input is commendable as it is the amount of knowledge that can derived by simple inference. It’s astonishing how good LLMs can extract insights from minimal and often partial input (the frame). The availability of specific contextual data (the scene) enhances top-down the agent’s comprehension of the situation, creating a continuous feedback loop of scene-frame-scene activations. Interestingly, while an initial understanding of the scene is vital to initiate this loop, and must therefore be forced externally for example by describing the general communicative setting, the scene will be progressively and automatically enriched by integrating new frames, as the communication evolves. This, in turn, allows the agent to autonomously adapt to the evolving communicative context. This is situational awareness at play.

Ensuring Precision in Linguistic Processing

Let’s be clear, this approach isn’t without its challenges. Since LLMs primarily operate on linguistic surface structures, the frame/scene activations can easily go astray. I am not referring to the well know hallucinations, but to glaring misinterpretations of ongoing conversations. Humans possess robust control mechanisms to prevent such deviations, and to allow the interlocutor (or the interpreter in our specific case) to remain aligned with the unfolding of the communicative situation. Surely, also humans are not perfect here, and “miscommunication” or “misunderstanding” happens all time. But these mechanisms are very intricate and they remain, as of now, particularly challenging to emulate by computers. Let’s not forget that we are not aiming at perfection, but at climbing this ladder of complexity one step at a time.

Harnessing Insights for Real-Time Translation

Now, provided we have obtained some level of “understanding” of the communication by means of scenes and frames activations, the pressing issue becomes how to harness these insights to improve translation. And how to do this in real-time, i.e. without knowing the full context of the conversation (which incidentally is one of the other peculiar challenges of machine interpreting).

Two primary approaches surface: the implicit and the explicit one. They can coexist harmoniously. But let’s briefly consider them separately. The implicit strategy involves using the LLM to both grasp the context and simultaneously adapt the translation based on this comprehension. Essentially, the LLM offers directly – i.e. without external intervention – a more contextually appropriate translation due to its inherent processes. We could already demonstrate impressive improvements (around 25% depending on language combinations) by simply injecting a LLM into the translation pipeline and crafting instructions aligned with the task at stake.

While this method is straightforward and produces visible improvements, I find it less captivating, and it’s not without its drawbacks. More fascinating is the explicit strategy. Here we seek to extract insights from the scene/frame activations and utilize this meta-linguistic information to steer the translation process, i.e. by embedding this knowledge into dynamic prompt sequences. This bears resemblance to both In-Context Learning and the Chain-of-Thought Prompting technique, but necessitates significant modifications to address the unique challenges posed by spoken translation, which are too extensive to delve into here.

Do you want to contribute with an article, a blog post or a webinar?

We’re always on the lookout for informative, useful and well-researched content relative to our industry.

Claudio Fantinuoli

Claudio is Chief Technology Officer at KUDO Inc., a company specialized in delivering human and AI live interpretation. The latest product is the KUDO AI Translator, a real-time, continuous speech-to-speech translation system. Claudio is also Lecturer and Researcher at the University of Mainz and Founder of InterpretBank, an AI-tool for professional interpreters.